Install and Run SQL Server Docker Container on Mac

Like many developers, I use macOS, Windows, and Linux for development and testing, but I primarily develop on my Mac. A few of my projects use SQL Server as the data storage layer. Setting up the SQL Server Docker container on the Mac and opening up the ports was easy and didn’t take more than 10 minutes.

Steps followed:

- Install Docker

- Pull SQL Server Docker Image

- Run SQL Server Docker Image

- Install mssql Client

- Install Kitematic

- Open the Ports to connect to SQL Server from the network

- Setup port forwarding to enable access outside the network

Install Docker

Download the Docker .dmg image and install it. Just follow the prompts; it’s very straightforward.

https://docs.docker.com/docker-for-mac/install/#download-docker-for-mac

https://download.docker.com/mac/stable/Docker.dmg

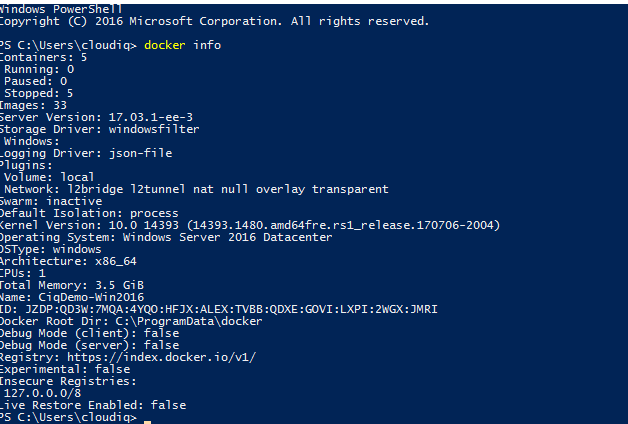

Once Docker is installed, you can verify the installation and version:

bash-3.2$ docker -v

Docker version 17.09.0-ce, build afdb6d4

Pull SQL Server Docker Image (DEV Version)

docker pull microsoft/mssql-server-linux:2017-latest

Create SQL Server Container from the Image and Expose It on Port 1433 (Default Port)

docker run -d --name macsqlserver -e 'ACCEPT_EULA=Y' -e 'SA_PASSWORD=Passw1rd' -e 'MSSQL_PID=Developer' -p 1433:1433 microsoft/mssql-server-linux:2017-latest

-d: runs the container in detached mode, so it keeps running in the background.

--name macsqlserver: gives your Docker container a friendly name, which is useful for stopping and starting it from the Terminal.

-e 'ACCEPT_EULA=Y': sets an environment variable named ACCEPT_EULA to the value Y inside the container. This is required to run SQL Server on Linux.

-e 'SA_PASSWORD=Passw1rd': sets the password for the sa login. Replace it with your own strong password. Also required.

-e 'MSSQL_PID=Developer': tells SQL Server to run as the Developer Edition.

-p 1433:1433: maps port 1433 on the host to port 1433 in the container. SQL Server listens for connections on TCP port 1433 by default.

microsoft/mssql-server-linux:2017-latest: this final parameter tells Docker which image (and tag) to use.
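SQL Server rejects a weak SA_PASSWORD during first-start setup, and the container then stops, so it can be worth checking the password before calling docker run. The sketch below assumes SQL Server’s default password policy: at least eight characters, drawn from at least three of four sets (uppercase, lowercase, digits, symbols). The helper name check_sa_password is hypothetical, and it only approximates the server-side rule.

```bash
#!/usr/bin/env bash
# Sketch only: approximates SQL Server's default password policy.
# Assumed rule: at least 8 characters, with characters from at least
# three of four sets (uppercase, lowercase, digits, symbols).
# check_sa_password is a hypothetical helper, not part of Docker or SQL Server.
check_sa_password() {
  local pw=$1
  local sets=0

  # Too short: fail immediately.
  if (( ${#pw} < 8 )); then
    return 1
  fi

  # Count how many of the four character sets appear in the password.
  [[ $pw =~ [[:upper:]] ]] && sets=$((sets + 1))
  [[ $pw =~ [[:lower:]] ]] && sets=$((sets + 1))
  [[ $pw =~ [[:digit:]] ]] && sets=$((sets + 1))
  [[ $pw =~ [^[:alnum:]] ]] && sets=$((sets + 1))

  # Exit status 0 only when at least three sets are present.
  (( sets >= 3 ))
}
```

For example, check_sa_password 'Passw1rd' && docker run -d --name macsqlserver ... only launches the container when the password passes the check. Passw1rd passes (uppercase, lowercase, digit), but it is still a weak password for anything beyond local testing.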

Install SQL Client for Mac

If you don’t have npm installed on your Mac, install Homebrew and Node.js first.

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

brew install node

node -v

npm -v

$ npm install -g sql-cli

/usr/local/bin/mssql -> /usr/local/lib/node_modules/sql-cli/bin/mssql

/usr/local/lib

└── sql-cli@0.6.2

$ npm i -g npm

Connect to SQL Server Instance

$ mssql -u sa -p Passw1rd

Connecting to localhost...done

sql-cli version 0.6.2

Enter ".help" for usage hints.

mssql> select * from sys.dm_exec_connections

Get External Tools to Manage Docker

Kitematic

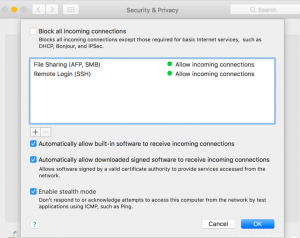

Open Up the Firewall to connect to SQL Server from outside the Host

Make sure your firewall is configured to allow connections to SQL Server. I turned off “Block all incoming connections” and enabled “Automatically allow downloaded signed software to receive incoming connections”. Without the proper firewall configuration, you won’t be able to connect to SQL Server from outside the host.

Connecting from the Internet (Port Forwarding Setup)

Let’s say you want to connect to your SQL Server from outside your network, or from anywhere via the internet. You can set up port forwarding on your router for that.

Get your public-facing IP and set up port forwarding for port 1433 (the SQL Server port you exposed from your Docker container). If it’s set up correctly, you should be able to telnet to that port to verify connectivity.

telnet 69.11.122.159 1433

Unless you absolutely require it, it’s a very bad idea to expose SQL Server to the internet. It should stay behind your network; only your web server should be accessible from the internet.

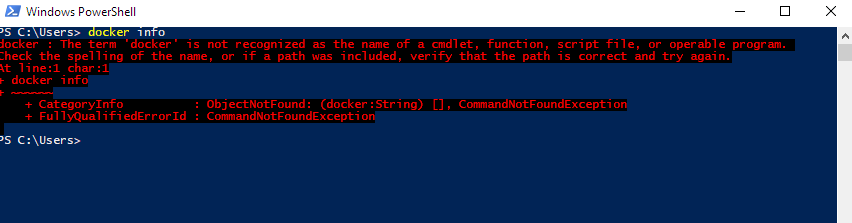
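Newer macOS releases no longer ship telnet. The built-in nc -vz 69.11.122.159 1433 performs a similar check, or you can use the bash-only sketch below, which relies on bash’s /dev/tcp redirection. The function name port_open is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch only: port_open is a hypothetical helper (bash-only, not POSIX sh).
# Succeeds if a TCP connection to host:port can be opened.
# Caveat: if a firewall silently drops packets, the attempt can block until
# the operating system's TCP connect timeout expires.
port_open() {
  local host=$1 port=$2
  # Open file descriptor 3 to host:port inside a subshell; the subshell
  # closes it on exit. Errors such as "Connection refused" are discarded.
  (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null
}
```

For example, port_open 69.11.122.159 1433 && echo "port 1433 is reachable". Like telnet, this only proves that something accepts TCP connections on that port, not that SQL Server will accept your login.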

Troubleshooting

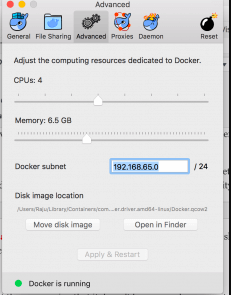

If, while launching the Docker container, you get an error saying there isn’t enough memory to run SQL Server, increase the memory allocated to Docker. The image requirements are:

- This image requires Docker Engine 1.8+ on any of its supported platforms.

- At least 3.25 GB of RAM. Make sure to assign enough memory to the Docker VM if you’re running on Docker for Mac or Windows.

This is how I set it up:

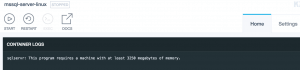

If you don’t provision enough memory, you will get the out-of-memory error described above.
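Both image requirements can be checked from the Terminal before launching the container. The sketch below parses the docker -v output shown earlier and compares the memory available to Docker, as reported in bytes by docker info --format '{{.MemTotal}}', against 3.25 GB. It assumes “3.25 GB” means 3.25 GiB (3,489,660,928 bytes); all function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch only: checks the image requirements listed above.
# Assumptions: versions look like "17.09.0-ce" (as printed by docker -v),
# and "3.25 GB" means 3.25 GiB. All function names are hypothetical.

# 3.25 GiB = 3.25 * 1024^3 bytes
MIN_DOCKER_MEM_BYTES=3489660928

# "Docker version 17.09.0-ce, build afdb6d4" -> "17.09.0-ce"
docker_version_from() {
  local line="${1#Docker version }"
  printf '%s\n' "${line%%,*}"
}

# Succeeds if version $1 >= version $2, comparing major.minor numerically.
version_at_least() {
  local have_major have_minor need_major need_minor rest
  IFS=. read -r have_major have_minor rest <<< "$1"
  IFS=. read -r need_major need_minor rest <<< "$2"

  # Drop suffixes such as "-ce" and default missing parts to 0.
  have_major=${have_major%%[!0-9]*}; have_major=${have_major:-0}
  have_minor=${have_minor%%[!0-9]*}; have_minor=${have_minor:-0}
  need_major=${need_major%%[!0-9]*}; need_major=${need_major:-0}
  need_minor=${need_minor%%[!0-9]*}; need_minor=${need_minor:-0}

  # 10# forces base 10, so a minor version like "09" is not read as octal.
  if (( 10#$have_major != 10#$need_major )); then
    (( 10#$have_major > 10#$need_major ))
    return
  fi
  (( 10#$have_minor >= 10#$need_minor ))
}

# Succeeds if $1 (bytes of memory available to Docker) meets the minimum.
memory_at_least_min() {
  (( $1 >= MIN_DOCKER_MEM_BYTES ))
}
```

For example: version_at_least "$(docker_version_from "$(docker -v)")" 1.8 && memory_at_least_min "$(docker info --format '{{.MemTotal}}')" && echo "requirements met". On Docker for Mac, MemTotal reflects the memory given to Docker’s VM, which is the value you adjust in Docker’s preferences.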

Look into Docker logs

The following commands show all containers, running and stopped (docker ps -a), and the log output of the SQL Server container (docker logs macsqlserver).

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9ea3a24563f9 microsoft/mssql-server-linux:2017-latest "/bin/sh -c /opt/m..." About an hour ago Up About an hour 0.0.0.0:1433->1433/tcp macsqlserver
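The PORTS column shows which host port forwards to SQL Server’s port 1433 inside the container. docker port macsqlserver 1433 prints that mapping directly; if a script needs just the host port (for example after mapping -p 14330:1433), the hypothetical helper below extracts it from a PORTS value. It is a sketch that assumes the PORTS format shown above.

```bash
#!/usr/bin/env bash
# Sketch only: host_port_for is a hypothetical helper.
# Given a PORTS value such as "0.0.0.0:1433->1433/tcp" and a container
# port, print the host port that forwards to it (here: 1433).
host_port_for() {
  local ports=$1 container_port=$2
  local mapping
  local -a mappings

  # PORTS can hold several comma-separated mappings.
  IFS=',' read -ra mappings <<< "$ports"
  for mapping in "${mappings[@]}"; do
    mapping=${mapping# }                 # trim the space after a comma
    if [[ $mapping == *"->${container_port}/tcp" ]]; then
      mapping=${mapping%%->*}            # e.g. "0.0.0.0:1433"
      printf '%s\n' "${mapping##*:}"     # text after the last colon
      return 0
    fi
  done
  return 1                               # no TCP mapping for that port
}
```

For example: host_port_for "$(docker ps --filter name=macsqlserver --format '{{.Ports}}')" 1433.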

$ docker logs macsqlserver

2017-10-08 23:06:52.29 Server Setup step is copying system data file 'C:\templatedata\master.mdf' to '/var/opt/mssql/data/master.mdf'.

2017-10-08 23:06:52.36 Server Setup step is copying system data file 'C:\templatedata\mastlog.ldf' to '/var/opt/mssql/data/mastlog.ldf'.

2017-10-08 23:06:52.36 Server Setup step is copying system data file 'C:\templatedata\model.mdf' to '/var/opt/mssql/data/model.mdf'.

2017-10-08 23:06:52.38 Server Setup step is copying system data file 'C:\templatedata\modellog.ldf' to '/var/opt/mssql/data/modellog.ldf'.
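The log above shows SQL Server copying its system databases on first start, and connections fail until startup finishes. The sketch below polls docker logs until the message “SQL Server is now ready for client connections” appears. It assumes that message marks the end of startup; the function names, default attempt count, and two-second delay are arbitrary choices.

```bash
#!/usr/bin/env bash
# Sketch only: hypothetical helpers for waiting on SQL Server startup.

# Reads log text on stdin; succeeds if the ready message is present.
sql_server_ready() {
  grep -q 'SQL Server is now ready for client connections'
}

# Polls the container log until SQL Server reports it is ready.
# Args: container name, maximum attempts (default 30), 2 seconds apart.
wait_for_sql_server() {
  local container=$1 attempts=${2:-30}
  local i
  for ((i = 1; i <= attempts; i++)); do
    # docker logs replays the container's stdout and stderr.
    if docker logs "$container" 2>&1 | sql_server_ready; then
      return 0
    fi
    sleep 2
  done
  return 1   # gave up: check docker logs for errors such as low memory
}
```

For example: wait_for_sql_server macsqlserver 30 && mssql -u sa -p Passw1rd.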

Security

I highly recommend creating least-privileged accounts and disabling the sa login. If you expose your SQL Server to the internet, there are tons of hacking and pentest tools that use the sa login for brute-force attacks.
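As a starting point, the sketch below prints a T-SQL script that creates a named administrator, creates a least-privileged application login with read/write access to one database, and disables sa only if the new administrator really is a sysadmin, so you don’t lock yourself out. All login and database names are hypothetical; the database is assumed to exist, and login names are assumed not to contain ]. Run the printed script with a client connected as sa.

```bash
#!/usr/bin/env bash
# Sketch only: prints a T-SQL hardening script. Names are hypothetical.

# Escape single quotes for a T-SQL string literal (' becomes '').
tsql_quote() {
  local q="'"
  printf '%s' "${1//$q/$q$q}"
}

# Args: admin login, admin password, app login, app password, database.
hardening_script() {
  local admin=$1 admin_pw=$2 app=$3 app_pw=$4 db=$5
  cat <<EOF
-- 1. A named administrator to use instead of sa.
CREATE LOGIN [$admin] WITH PASSWORD = N'$(tsql_quote "$admin_pw")';
ALTER SERVER ROLE sysadmin ADD MEMBER [$admin];

-- 2. A least-privileged login for the application (database must exist).
CREATE LOGIN [$app] WITH PASSWORD = N'$(tsql_quote "$app_pw")';
USE [$db];
CREATE USER [$app] FOR LOGIN [$app];
ALTER ROLE db_datareader ADD MEMBER [$app];
ALTER ROLE db_datawriter ADD MEMBER [$app];

-- 3. Disable sa only if step 1 produced a working sysadmin.
IF IS_SRVROLEMEMBER(N'sysadmin', N'$admin') = 1
    ALTER LOGIN sa DISABLE;
EOF
}
```

For example, hardening_script dba_admin 'Adm1n!Pass' app_user 'App!Pass9' appdb > harden.sql writes the script to a file you can review before running it.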